
Information Security, Security Analytics

By Oliver Rochford, Security Evangelist, Securonix

Recent research by Picus Security showed that evasive techniques are increasingly being adopted by cyber threat actors. Out of the top ten MITRE ATT&CK techniques observed in malware in the wild in 2021, fully half were focused on defense evasion.

Our own research at Securonix on ransomware trends in 2021 paints a similar picture, with living-off-the-land techniques and human-operated attacks prevalent amongst ransomware operators.

Evasive techniques are not novel but have long been associated with sophisticated threat actors such as nation states. What is new is the widespread proliferation of evasion approaches. To better understand why this is, let’s take a closer look at one example – fileless attacks.

Evasiveness by Example – Fileless Attacks

As the name implies, what defines a fileless attack is the lack of dedicated malware or other attack code that might be used to identify and detect the threat activity. Fileless attacks have been around for a long time, but they are increasingly seen in the wild, with over 40% of current attacks now using at least one fileless technique.

As Table 1 below shows, attackers can draw on a number of different fileless techniques designed to bypass traditional security controls.

Table 1: Fileless Attack Techniques

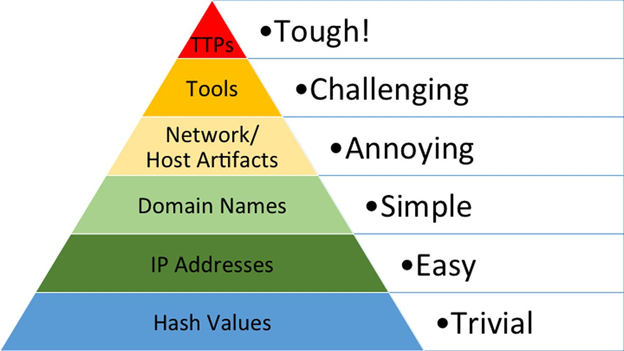

What makes fileless techniques so hard to detect and respond to is that they deny us the easiest and most reliable way to detect an attack: the characteristics of the malicious binary, for example its file hash. Technical indicators such as hash values or IP addresses are the easiest to detect because we are basically just matching text in a string or computing a value for comparison.
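To illustrate why hash-based IOC matching is so simple, and why it fails once there is no file to hash, here is a minimal sketch. The feed contents and function names are hypothetical; the single "known-bad" hash is simply the SHA-256 of the bytes `b"test"` so the example has something to match.

```python
import hashlib

# Hypothetical IOC feed of known-bad SHA-256 hashes. The entry below is the
# SHA-256 of b"test", used only so the example has something to match.
KNOWN_BAD_SHA256 = {
    "9f86d081884c7d659a2feaa0c55ad015a3bf4f1b2b0b822cd15d6c15b0f00a08",
}


def sha256_of(data: bytes) -> str:
    """Compute the SHA-256 hex digest of a file's contents."""
    return hashlib.sha256(data).hexdigest()


def ioc_match(data: bytes) -> bool:
    """Boolean IOC check: the hash is either in the feed or it is not."""
    return sha256_of(data) in KNOWN_BAD_SHA256


if __name__ == "__main__":
    print(ioc_match(b"test"))   # True: exact match against the feed
    print(ioc_match(b"test!"))  # False: one changed byte yields a new hash
```

A fileless attack gives this check nothing to feed into `sha256_of`, and even a one-byte change to a binary defeats it.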

Without malicious binaries, we lack reliable indicators of compromise to signify “known-bad”. And if we can’t detect threats by identifying the tools and infrastructure they are using, we must instead focus on their tradecraft and behavior.

Figure 1: David Bianco’s Detection “Pyramid of Pain”

Detecting malicious behavior is far more challenging than just matching IOCs. As David Bianco described in his “Pyramid of Pain”, focusing on detecting TTPs can ultimately cause real pain for any would-be attacker, leaving them nowhere to hide.

Signatures Versus Behavior = Precision Versus Recall

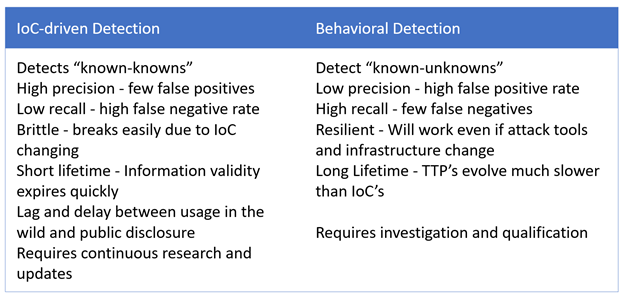

Traditional IOC-based detection methodologies work against “known-knowns”. In contrast, behavioral analysis looks for “known-unknowns”. (As a side note, outside of hyperbolic marketing, it is not possible, by definition, to detect “unknown-unknowns”.)

Both approaches excel in certain types of detection scenarios, and both incur trade-offs.

Table 2: IOC Versus Behavioral Detection

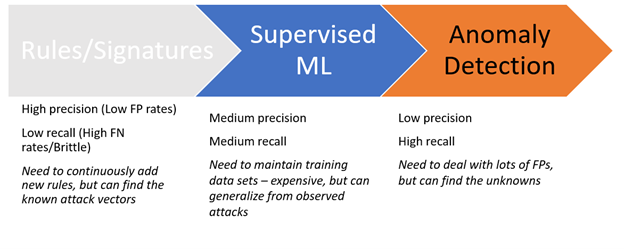

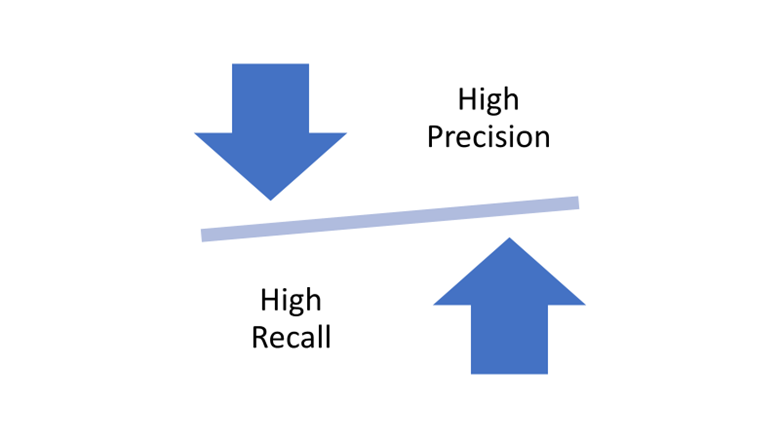

Figure 2: Pros and Cons of Different Detection Approaches

Detecting IOCs can be done with high precision, meaning that when the detector fires, the alert is very likely a true positive because it exactly matches the search criteria, for example a file hash. IOC-based detection also returns a Boolean value, true or false: we either have a match or we don’t. The problem is that it also has low recall, meaning it finds only a small share of the actual attacks and therefore produces many false negatives. Being precise comes at a price: we miss anything that deviates even slightly from the expected criteria.

Behavioral analysis, on the other hand, is less precise because the detection logic is fuzzier, but it offers higher recall. In practice, this means fewer false negatives but more false positives.
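To pin down the two terms, here is a minimal sketch of the standard definitions: precision is the share of alerts that are real attacks, and recall is the share of real attacks that raise an alert. The counts in the usage lines are hypothetical, chosen to resemble an exact-match IOC detector.

```python
def precision(tp: int, fp: int) -> float:
    """Share of alerts that were real attacks: TP / (TP + FP)."""
    # Convention for this sketch: no alerts at all counts as 0.0.
    return tp / (tp + fp) if tp + fp else 0.0


def recall(tp: int, fn: int) -> float:
    """Share of real attacks that raised an alert: TP / (TP + FN)."""
    return tp / (tp + fn) if tp + fn else 0.0


# Hypothetical counts for an exact-hash detector over 100 real attacks:
# every alert is correct, but most attacks (e.g. fileless ones) go unseen.
tp, fp, fn = 20, 0, 80
print(precision(tp, fp))  # 1.0
print(recall(tp, fn))     # 0.2
```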

This leaves us with a conundrum – we need behavioral analysis to discover modern threats that are evasive by nature. But the increase in visibility comes at the expense of having to deal with more false positives.
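Behavioral detectors typically produce a score rather than a Boolean, and where we set the alerting threshold decides how the trade-off plays out. The sketch below uses a handful of hypothetical scored events (not real data) to show that lowering the threshold raises recall while precision falls.

```python
# Hypothetical behavioral anomaly scores: (score, is_actual_attack).
EVENTS = [
    (0.95, True), (0.90, True), (0.80, False), (0.70, True),
    (0.60, False), (0.40, False), (0.30, True), (0.10, False),
]


def evaluate(threshold: float) -> tuple[float, float]:
    """Return (precision, recall) when alerting on score >= threshold."""
    tp = sum(1 for score, bad in EVENTS if score >= threshold and bad)
    fp = sum(1 for score, bad in EVENTS if score >= threshold and not bad)
    fn = sum(1 for score, bad in EVENTS if score < threshold and bad)
    p = tp / (tp + fp) if tp + fp else 0.0
    r = tp / (tp + fn) if tp + fn else 0.0
    return p, r


for t in (0.85, 0.5, 0.2):
    p, r = evaluate(t)
    print(f"threshold={t}: precision={p:.2f} recall={r:.2f}")
# threshold=0.85: precision=1.00 recall=0.50
# threshold=0.5: precision=0.60 recall=0.75
# threshold=0.2: precision=0.57 recall=1.00
```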

Wait a minute! If IOC-based detection is so precise, why do I experience lots of false positives with signature-based technologies?

There are three things going on there:

- A lack of uniquely identifiable characteristics, for example a regular expression searching for a string that also appears in binaries other than the malware.

- Logic too simplistic to express the more complex conditions that could reduce FPs, for example a lack of IF/ELSE/AND/OR operators and an overreliance on regular expressions.

- A deliberate loosening of the criteria to broaden detection and avoid false negatives.
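The first two causes can be illustrated with a minimal sketch comparing a single-string regular expression against a rule that combines conditions with AND/OR logic. Both rules and both sample strings are hypothetical illustrations, not production signatures.

```python
import re

# Hypothetical loose rule: one generic string that benign admin scripts also
# contain, so it fires on legitimate activity (a source of false positives).
LOOSE_RULE = re.compile(rb"Invoke-WebRequest")

# Hypothetical stricter rule: ALL required strings must be present AND at
# least ONE of the optional strings.
REQUIRED = (b"Invoke-WebRequest", b"FromBase64String")
ANY_OF = (b"-EncodedCommand", b"-enc ")


def loose_match(data: bytes) -> bool:
    return LOOSE_RULE.search(data) is not None


def strict_match(data: bytes) -> bool:
    has_all = all(s in data for s in REQUIRED)
    has_any = any(s in data for s in ANY_OF)
    return has_all and has_any


benign = b"Invoke-WebRequest https://example.com/update.zip -OutFile update.zip"
suspicious = b"powershell -enc SQBFAFgA; [Convert]::FromBase64String($p); Invoke-WebRequest $u"

print(loose_match(benign), strict_match(benign))          # True False
print(loose_match(suspicious), strict_match(suspicious))  # True True
```

The extra conditions remove the false positive on the benign script while still matching the suspicious one; loosening them again (the third cause) would bring the false positives back.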

The Power of Modeling

As Joshua Neil, our Chief Data Scientist, recently explained, most solutions try to strike a balance between precision and recall, but what we really want is the best of both worlds. We can get there quite simply by moving away from considering individual detections and viewing an attack as a series of related events instead.

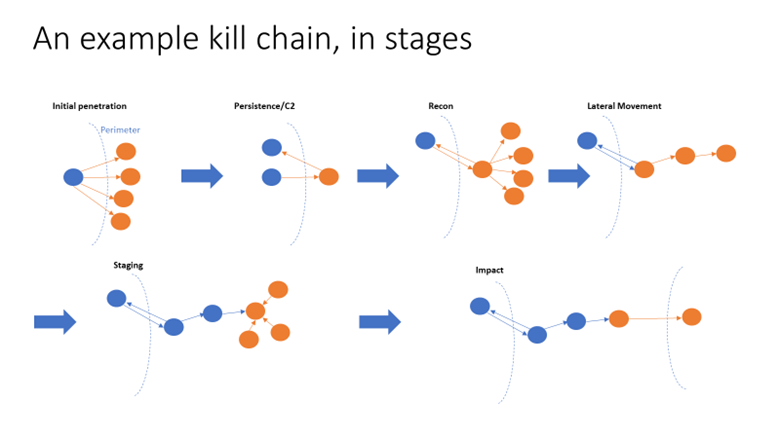

As a general rule, cyber breaches are composed of multiple different attack stages, often referred to as a kill chain, or attack lifecycle. Attackers must complete different steps to achieve their ultimate objective, for example obtaining higher privileges, encrypting files, or exfiltrating sensitive data. In some cases, the goal might even be ongoing espionage and surveillance with an open-ended end date. What matters to us is that being breached is not an event – it’s a state. Every action an attacker takes, even if it is just beaconing home to see if there are any commands to execute, creates evidence and presents us with an opportunity to catch them in the act.

Figure 3: Kill Chain Stages

We can also identify which attack techniques any given alert or event is associated with, which in turn lets us map them to attack lifecycle phases. This provides us with a taxonomy to describe attacks. If this sounds familiar, that’s because it is essentially what the MITRE ATT&CK framework does. But we can go much further and use these mappings to combine and fuse the detections from different detection approaches into a coherent narrative of an attack.
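A minimal sketch of the mapping-and-fusing idea: tag each alert with the ATT&CK tactic of its technique, then group alerts per host in time order so that IOC and behavioral detections read as one sequence. The technique IDs and tactic names are real ATT&CK entries, but the table is a small hand-picked subset (some real techniques belong to several tactics; this sketch keeps one each), and the `Alert` type, hosts, and detector labels are hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass

# Illustrative subset of MITRE ATT&CK technique -> tactic mappings.
TECHNIQUE_TO_TACTIC = {
    "T1566": "Initial Access",     # Phishing
    "T1059.001": "Execution",      # Command and Scripting Interpreter: PowerShell
    "T1003": "Credential Access",  # OS Credential Dumping
    "T1021": "Lateral Movement",   # Remote Services
    "T1486": "Impact",             # Data Encrypted for Impact
}


@dataclass
class Alert:
    timestamp: int   # simplified epoch seconds
    host: str        # entity the alert is about
    technique: str   # ATT&CK technique ID assigned to the alert
    source: str      # hypothetical detector label, e.g. "ioc" or "behavior"


def build_narratives(alerts: list[Alert]) -> dict[str, list[tuple[int, str, str, str]]]:
    """Group alerts per host, in time order, each tagged with its tactic."""
    by_host = defaultdict(list)
    for alert in sorted(alerts, key=lambda x: x.timestamp):
        tactic = TECHNIQUE_TO_TACTIC.get(alert.technique, "Unmapped")
        by_host[alert.host].append((alert.timestamp, tactic, alert.technique, alert.source))
    return dict(by_host)


alerts = [
    Alert(300, "host-a", "T1486", "behavior"),
    Alert(100, "host-a", "T1059.001", "behavior"),
    Alert(200, "host-a", "T1003", "ioc"),
    Alert(150, "host-b", "T1566", "ioc"),
]
for host, steps in build_narratives(alerts).items():
    print(host, [tactic for _, tactic, _, _ in steps])
# host-a ['Execution', 'Credential Access', 'Impact']
# host-b ['Initial Access']
```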

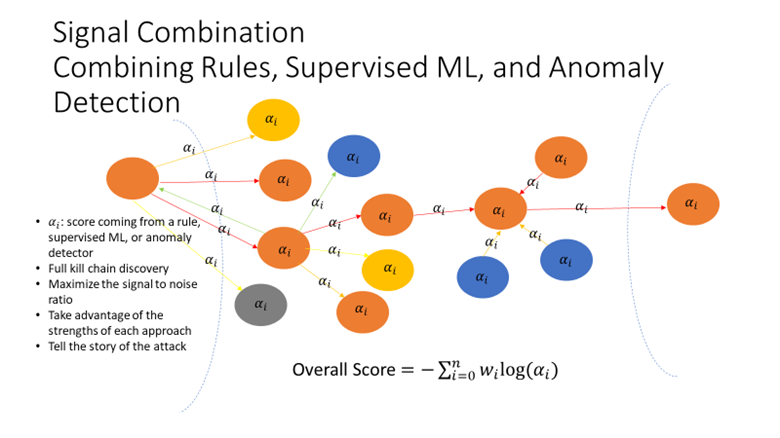

Figure 4: Signal Combination

What is even more powerful is that we can also use this to model different types of threats and attacks. If MITRE ATT&CK provides a taxonomy, threat modeling adds the ontology. More importantly, a threat model is a form of meta-detection – allowing us to fit together the different alerts and detections we generate like the pieces of a puzzle.
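To make "meta-detection" concrete, here is a minimal sketch that treats a threat model as a list of expected tactic stages and measures how much of it the observed alerts cover. The model below is a hypothetical simplification for illustration and is not a reproduction of Figure 5; stage order is ignored to keep the sketch short.

```python
# Hypothetical, simplified ransomware threat model expressed as ATT&CK
# tactic stages. Illustrative only.
RANSOMWARE_MODEL = (
    "Initial Access",
    "Execution",
    "Credential Access",
    "Lateral Movement",
    "Impact",
)


def model_coverage(
    observed_tactics: list[str], model: tuple[str, ...] = RANSOMWARE_MODEL
) -> tuple[float, list[str]]:
    """Return the fraction of model stages observed and which stages matched.

    Order is ignored here; a fuller model might also check that stages occur
    in a plausible sequence.
    """
    seen = set(observed_tactics)
    matched = [stage for stage in model if stage in seen]
    return len(matched) / len(model), matched


coverage, matched = model_coverage(["Execution", "Credential Access", "Execution"])
print(f"{coverage:.0%} of the model matched: {matched}")
# 40% of the model matched: ['Execution', 'Credential Access']
```

A partial match like this is exactly the kind of "something is starting to develop" signal that a single detector on its own would not produce.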

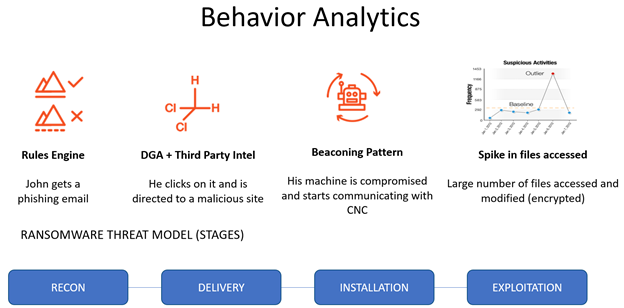

Figure 5: Ransomware Threat Model

Rather than relying on a single type of detection, with its trade-off between precision and recall, we can now get the best of both worlds by imposing a higher order of logic based on modeling.

Instead of a binary true-or-false verdict on whether a breach has occurred, we can now offer a partial answer. Even if we can match only parts of our models, that is enough to highlight that something is starting to develop. Most of all, we can leverage IOCs when we have them, and consider multiple low-confidence detections in combination when we don’t.
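One common way to combine several low-confidence detections is a noisy-OR, 1 - Π(1 - pᵢ). This is a swapped-in illustrative technique, not necessarily how any particular product combines signals, and it assumes the detections are independent evidence, which real detections often are not. The alert threshold and confidence values are hypothetical.

```python
import math

ALERT_THRESHOLD = 0.5  # hypothetical cut-off for raising an alert


def noisy_or(confidences: list[float]) -> float:
    """Combine independent detection confidences as 1 - prod(1 - p_i).

    An empty list gives 0.0 (no evidence). Treat the result as a rough
    ranking score, since the independence assumption rarely holds exactly.
    """
    return 1.0 - math.prod(1.0 - p for p in confidences)


weak_signals = [0.3, 0.3, 0.3]  # each one alone stays below the threshold
combined = noisy_or(weak_signals)
print(round(combined, 3), combined >= ALERT_THRESHOLD)  # 0.657 True
```

A single high-confidence IOC match would cross the threshold on its own, while several weak behavioral signals can cross it together.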

The data science insights we discussed are from a talk by Joshua Neil, Chief Data Scientist at Securonix. Check out what else Joshua has to say and learn more about using data-driven methods to detect nation state attacks.